It's finally time to get back to the series of posts on the essential physics concepts that will allow you to interpret and differentiate between acquisition artifacts. There are another five or six posts in this background series, so bear with me. After that we will shift gears and look at "good data," taking some time to assess the normal variations that you can expect to see in time series EPI, and then I promise we'll look at artifacts themselves.

When reality meets imagination

Before we go any further there are a few mathematical properties we need to review. These are actually quite simple relationships that, for the most part, can be explained via a handful of pictures. Like this one....

[Image: Maths dude, chillin'.]

Complex numbers

The name notwithstanding, complex numbers are quite straightforward to understand from a physical perspective, with a tiny bit of explanation. By the time you've finished reading this post you should have a basic idea of what complex numbers mean and where they come from (they arise quite naturally, as it happens), but for now I am simply going to define some relationships. Hang in there.

We start by defining a so-called imaginary number as any number for which the square is negative. The squares of real numbers - the ones you're used to in everyday life, such as 2, 8.73, -7, pi, and so on - are never negative, whether the number being squared is positive or negative. Thus 2 x 2 = 4, 8.73 x 8.73 = 76.2129, (-7) x (-7) = 49 and so on. Squaring a negative number results in a positive number. So how could we possibly get an answer of -4 or -25 out of any square?

To achieve the definition of an imaginary number we simply create a unit, i, known as the "imaginary unit," such that i x i = -1. Thus, we can also see that the square root of -1 is i. Keeping these two relationships in mind we can quickly write down increasing powers of i as follows:

[Figure: increasing powers of i. Courtesy: Karla Miller, FMRIB, University of Oxford.]

What we're seeing is that increasing powers of i are either real or imaginary, where ones and minus ones are clearly real and already familiar to us. So, let's now separate the real from the imaginary parts for each of the powers of i in the above series. We're just going to rewrite the above list and assign a real and an imaginary part, one or both of which can be zero:

[Figure: the same powers of i, separated into real and imaginary parts. Courtesy: Karla Miller, FMRIB, University of Oxford.]

Note that this is exactly the same series as we just saw, only the real and imaginary parts are separated into two columns. Now we can see that the real part of this power series goes in the order 1, 0, -1, 0, 1, 0, -1, 0. Simultaneously the imaginary part, in terms of i, goes in the order 0, 1, 0, -1, 0, 1, 0, -1. Let's plot these two sets of terms (real and imaginary) against the power of i that each pair represents:

[Figure: real and imaginary parts plotted against the power of i. Courtesy: Karla Miller, FMRIB, University of Oxford.]
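If you'd like to check the two columns for yourself, here is a minimal Python sketch that builds the first eight powers of i by repeated multiplication and splits each one into its real and imaginary part. The function name `powers_of_i` is just something made up for this illustration; Python writes the imaginary unit as `1j`.

```python
# Walk through i^0 ... i^7 by repeated multiplication and split each
# result into its real and imaginary parts.
i = 1j  # Python's spelling of the imaginary unit


def powers_of_i(count):
    """Return a list of (n, real_part, imag_part) for n = 0 .. count-1."""
    rows = []
    z = 1 + 0j  # i^0
    for n in range(count):
        rows.append((n, int(z.real), int(z.imag)))
        z *= i  # step up to the next power of i
    return rows


table = powers_of_i(8)
for n, real_part, imag_part in table:
    print(f"i^{n}: real = {real_part:2d}, imaginary = {imag_part:2d}")
```

The real column should cycle through 1, 0, -1, 0 and the imaginary column through 0, 1, 0, -1, exactly as listed above.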

Things are starting to look a lot more familiar now, eh? Let's fill in the plots for non-integer powers of i, thereby revealing two very familiar waves:

[Figure: the same plots filled in for non-integer powers of i. Courtesy: Karla Miller, FMRIB, University of Oxford.]

You should recognize two familiar waveforms: a sinusoid in yellow and a cosinusoid in blue. These two waveforms have the same frequency and differ only in the "starting point" of the waves; when the sinusoid is at zero the cosinusoid is already at a positive maximum. In other words, the sinusoid lags the cosinusoid by a quarter cycle. We'll come back to this lag, or phase difference, below.
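To see the "filling in" numerically, the sketch below raises i to a few non-integer powers and compares the real and imaginary parts with a cosine and a sine. It assumes Python's built-in complex power (which returns the principal value), and it treats each unit step in the power as a quarter turn, i.e. 90 degrees. The helper name `power_of_i` and the sample values are arbitrary choices for illustration.

```python
import math


def power_of_i(p):
    """Return i**p as a Python complex number (principal value)."""
    return 1j ** p


samples = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
for p in samples:
    z = power_of_i(p)
    # Each unit increase in the power corresponds to a quarter turn.
    angle = p * math.pi / 2
    print(
        f"p = {p:3.1f}: real = {z.real:+.3f} (cos = {math.cos(angle):+.3f}), "
        f"imag = {z.imag:+.3f} (sin = {math.sin(angle):+.3f})"
    )
```

The real part tracks the cosinusoid and the imaginary part tracks the sinusoid, to within floating-point rounding.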

Okay, so in this power series of i you saw how even powers of i were real (no imaginary component) and that odd powers of i were imaginary (no real component). Indeed, we can define any number as a complex number having a real plus an imaginary part, even if the real or the imaginary part (or both) is zero! (The number whose real and imaginary parts are both zero? Zero itself.) If the imaginary part is zero then we call it a real number, whereas if the real part is zero we call it an imaginary number. It's all just nomenclature.

Real and imaginary, meet magnitude and phase. Oh, I see you've already met!

You've just seen a definition of complex numbers without seeing what they mean in physical terms. Why would we need an imaginary component of any number? Perhaps it's something to do with fantasy, where the real part of your salary is that which barely covers your rent or mortgage, and the imaginary part is what you would like to spend on a new car or a vacation in Bali? Not quite.

Let's introduce two other concepts that will likely be a lot more familiar to you. It turns out that these properties - magnitude and phase - are equivalent to the previous real and imaginary description. So I'll pick up where I left off in the previous description and simply make a plot of a complex number, 6 + 5i. Recall that this number has a real part of 6 units and an imaginary part of 5 units. For the plot let's make the x and y axes reflect the real and imaginary components, respectively:

[Figure: The complex number, 6 + 5i]

This graphical representation is known as the complex plane, since it plots complex numbers. A general illustration of the complex plane looks like this (stolen from Wikipedia):

But let's stick with the 6 + 5i example and work it through. (I prefer worked examples!) Okay then, now that we have a vector making an angle, theta, with the x axis, we can use basic trigonometry (I'm sure you remember x = r.cos(theta), y = r.sin(theta) and tan(theta) = y/x from high school) to fill in the missing parts:

Now we can see that the magnitude of the complex number 6 + 5i is the length of the vector, r = sqrt(6x6 + 5x5) = sqrt(61), which is 7.8 (ish), and that the angle is given by tan(theta) = 5/6, so theta is about 39.8 degrees. What about that angle, theta? What does that mean? To understand the angle as well as the magnitude we need to do the reverse of what we've just done, i.e. start with a vector making an angle, theta, and resolve the x and y components. This is achieved by what's called Euler's formula, exp(i.theta) = cos(theta) + i.sin(theta):

In this representation the circle is a unit circle (r=1). The (real,imaginary) coordinates for any point on the circle can be computed by r.cos(theta) and r.sin(theta). In my example where r=7.8 the circle has a larger radius but the trigonometric relationships are the same; all we've done is to multiply both sides of the Euler formula by 7.8. (See Note 1.)
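The round trip for the 6 + 5i example can be sketched in a few lines of Python using the standard `cmath` module: first real and imaginary to magnitude and phase, then back again via Euler's formula scaled by r. The variable names are my own, for illustration only.

```python
import cmath
import math

z = 6 + 5j

# Forward: real/imaginary -> magnitude r and phase angle theta (radians).
magnitude, theta = cmath.polar(z)
print(f"magnitude r = {magnitude:.2f}")
print(f"phase theta = {math.degrees(theta):.1f} degrees")

# Reverse: Euler's formula, with both sides multiplied by r,
# resolves the vector back into its x and y components.
rebuilt = magnitude * cmath.exp(1j * theta)
print(f"r.cos(theta) = {rebuilt.real:.2f}, r.sin(theta) = {rebuilt.imag:.2f}")
```

Nothing is lost in either direction: (6, 5) and (r, theta) are two descriptions of the same point in the complex plane.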

Now let's do something slightly different. Consider the figure below. We have a vector with magnitude A which lies initially at the green position. To rotate the green A vector to the red position, all we need do is change the angle, phi, between the two; the magnitude doesn't change. To make this rotation happen we simply multiply the green vector A by a phase factor, or phase term, exp(i.phi).

Summing up the last two manipulations, we see that if we want to make the vector's magnitude bigger (or smaller) we just multiply by a real number. And if we want to change the vector's position in the complex plane then we multiply by a term of the form exp(i.phi), where phi can be any angle at all between negative and positive infinity. When the phase multiplication exp(i.phi) = i then the vector rotates exactly 90 degrees in the counter-clockwise direction. When exp(i.phi) = -1 the vector rotates exactly 180 degrees. When exp(i.phi) = -i the vector rotates 90 degrees in the clockwise direction (which is equivalent to a 270 degree rotation in the counter-clockwise direction). Easy, right? If you get nothing else out of what you've read so far, at least remember this: any time you see a term exp(i.blah) appear in an MR signal equation, recognize that some sort of phase shift is happening.
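Here is a small Python sketch of those rotations, assuming a made-up vector of magnitude 3 lying along the +x axis. The helper `rotate` is hypothetical; all it does is multiply by exp(i.phi).

```python
import cmath
import math


def rotate(vector, phi_degrees):
    """Rotate a complex number counter-clockwise by phi_degrees."""
    return vector * cmath.exp(1j * math.radians(phi_degrees))


A = 3 + 0j  # hypothetical vector of magnitude 3 along the +x (real) axis

for phi in (90, 180, -90, 270):
    rotated = rotate(A, phi)
    print(
        f"phi = {phi:4d} deg -> ({rotated.real:+.2f}, {rotated.imag:+.2f}), "
        f"magnitude = {abs(rotated):.2f}"
    )
```

The magnitude stays at 3 for every angle, and the -90 and 270 degree rotations land on the same spot, up to floating-point rounding.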

Frequency is rate of change of phase. Really.

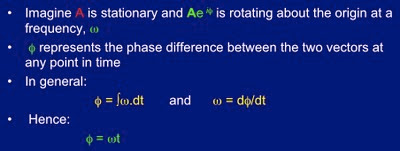

Now the final relationship for today's post. Instead of making the vector A jump in discrete hops by multiplying by fixed amounts of phase, exp(i.phi), imagine instead that it is rotating steadily about the origin at a constant frequency. The angle subtended between the vector and the +x axis is now changing continuously. We can still define an instantaneous phase angle, phi, but a nanosecond later the angle has changed!

Go back to the last figure above. If the green vector, A is rotating then we can define the following properties:

Let's work through the last two bullet-listed items above. Consider the right-hand yellow equation again. It says that the faster the phase angle, phi, changes with time, the higher the frequency. Well, of course! The phase angle between the red and green vectors evolves slowly with time when the precession of the green vector is slow. And in fact the frequency of rotation is given exactly by the time derivative of the phase evolution, w = d(phi)/dt. In words: frequency is the rate of change of phase.

Next look at the left-hand yellow equation. It's simply the time integral of the right-hand equation, of course. Solving the integral for real values of time, t, turns out to be very straightforward (even for someone as mathematically challenged as me!). At any time, t, after the start of rotation, the angle between the red and green vectors will be given by phi = w.t, the equation given in green in the last bullet point. Not only is the total phase evolution at time t the time integral of all prior rotations (or phase evolutions, if you prefer), it's simply the product of the frequency and the time period, t. You should be able to see how a rotation rate of one cycle per second will return the green vector to its starting point after exactly 1.2 seco... No, that can't be right! It returns to its starting point after exactly 1.000000 seconds if its frequency is 1 cycle/sec, or 1 Hertz (Hz). If the frequency were halved, to 0.5 Hz, then in one second the green vector would only rotate by 180 degrees, and so on.
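Both directions of that relationship can be sketched numerically in Python. I'm assuming a constant frequency specified in cycles per second (Hz), so that w = 2.pi.f in radians per second; the function names `phase_at` and `estimate_frequency` are invented for this example.

```python
import math


def phase_at(freq_hz, t_seconds):
    """Accumulated phase in degrees after t seconds at a constant frequency.

    Assumes freq_hz is in cycles per second, so the angular frequency
    is w = 2*pi*freq_hz radians per second and phi = w*t.
    """
    w = 2 * math.pi * freq_hz
    return math.degrees(w * t_seconds)


def estimate_frequency(phase_fn, t, dt=1e-6):
    """Estimate frequency in Hz as the rate of change of phase.

    Uses a central difference on phase_fn, which must return degrees.
    """
    dphi = phase_fn(t + dt) - phase_fn(t - dt)  # degrees
    return dphi / (2 * dt) / 360.0  # degrees per second -> cycles per second


print(phase_at(1.0, 1.0))  # one full turn after one second at 1 Hz
print(phase_at(0.5, 1.0))  # half a turn after one second at 0.5 Hz
print(estimate_frequency(lambda t: phase_at(0.5, t), t=0.3))
```

The first function is the integral (phase from frequency) and the second is the derivative (frequency from phase); feed one into the other and you get back the 0.5 Hz you started with, to within rounding.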

As a final mental exercise, imagine that you are looking along the +x axis in the complex plane. A vector of magnitude A is set to rotate about the origin at a frequency, w. If A is to trace a sinusoidal waveform from where you're looking, where would A be located at t=0? Or, if A is to trace a cosinusoidal waveform instead, with the same frequency, w, where would the vector A be located at t=0? (See Note 2 for the answers.)

i think therefore i, uh.....

Recall that we started out with what seemed like an arbitrary definition of i. We ended up seeing that i is necessary to describe the phase relationship between waves. The only difference between a sinusoid and a cosinusoid having the same frequency and magnitude is their phase. If there were no i there would be no way to characterize this phase difference, and we'd be stuck. Hopefully you now see that i arises as naturally as do integers, fractions, negative numbers and other concepts you already use daily. Expect to see i, or phase, any time you're dealing with time-dependent variables (waves) in science, too. They come up in electrical engineering, quantum mechanics and, yes, in MRI physics. Next time we'll see how the frequency, phase and magnitude information can be represented in convenient forms, one of which turns out to be...... an MR image. (What, you thought I was taking you through this i stuff for fun???)

-------------------

Notes

1. I am using the notation exp(i.a) to represent e to the power i.a because Blogger doesn't have a facility to represent exponents. I could have used e^(i.a) I suppose, but I decided to go with exp for convenience. Why is it e, the base of the natural logarithm, that appears in Euler's formula? e is one of Nature's constants, like pi, that seems to exist to define our reality. I don't know much about it, to be honest. But if you'd like to know more you could do worse than to start reading here.

2. For a sinusoidal wave, which yields zero amplitude at t=0 and which is at positive maximum amplitude a quarter cycle later, the vector A would start out along the -y axis.

For a cosinusoidal wave the vector A starts along the +x axis, yielding a positive maximum amplitude to the observer at t=0. A quarter turn later (90 degrees of phase evolution) the vector is along the +y axis, yielding zero amplitude, then another quarter turn later A points along -x, giving negative maximum amplitude. This is why we can refer to a sinusoid as having a "90 degree phase lag" relative to a cosinusoid of the same frequency. And just like that the concept of phase becomes useful to us and, hopefully, just as intuitive a property of waves generally as frequency and amplitude.

[Figure: Sinusoid - light blue. Cosinusoid - yellow.]
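The two answers in Note 2 can be checked with a short Python sketch. It assumes the observer sees the x-axis (real) component of the vector and that the rotation is counter-clockwise; the function `observed_amplitude` and the 1 Hz test frequency are my own choices for illustration.

```python
import cmath
import math


def observed_amplitude(start_angle_deg, freq_hz, t, magnitude=1.0):
    """x-axis (real) component of a vector rotating counter-clockwise.

    start_angle_deg is the vector's angle from the +x axis at t = 0.
    """
    phase = math.radians(start_angle_deg) + 2 * math.pi * freq_hz * t
    return (magnitude * cmath.exp(1j * phase)).real


freq = 1.0  # Hz, arbitrary choice for the demonstration
for t in (0.0, 0.125, 0.25, 0.5, 0.75):
    from_minus_y = observed_amplitude(-90, freq, t)  # starts along -y
    from_plus_x = observed_amplitude(0, freq, t)  # starts along +x
    print(
        f"t = {t:5.3f} s: start at -y -> {from_minus_y:+.3f} "
        f"(sin = {math.sin(2 * math.pi * freq * t):+.3f}), "
        f"start at +x -> {from_plus_x:+.3f} "
        f"(cos = {math.cos(2 * math.pi * freq * t):+.3f})"
    )
```

Starting along -y reproduces the sinusoid and starting along +x reproduces the cosinusoid; the only thing that differs between the two runs is the 90 degrees of starting phase.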
